It has become a tradition: in October, we go to Essen in Germany for SPIEL. We have our habits, we know how things work, and we have a grand, if exhausting, time. And it’s a great way to test a lot of things and to form opinions and write quick reviews! And to come back with, well, a bunch of loot to enjoy between two editions of SPIEL 😉

The loot doesn’t reflect everything we played (and there are a few things in there that are not a direct consequence of what we played in this edition), so here’s everything we saw!

Azul Duel

Azul is a classic that has been released in many versions, and this one goes back to the idea of the first game, but in a 2-player version. The original Azul already plays pretty well with two players, but I still appreciated the adjustments to the rules that make it more tactical – a few more choices that depend on the exact timing (and not only on the opportunity to deny something to your opponent), and I liked the idea of building your grid as you go. We’re not really in the market for 2-player games these days, but if we had been, it would have been under consideration for a buy (especially since we don’t own the original version).

Take Time

A cooperative game where players try to solve constraints of a round clock with the cards that they are given – but they can only discuss strategy before looking at said cards. Pretty neat, probably has the potential to get very difficult challenges – but it felt like, for us, it would fit the same niche as The Crew (which we both preferred). Very pretty, though, and probably shorter games than The Crew too.

Locus

Locus has a very clear (and acknowledged) inspiration from Ganz Schön Clever, except that instead of dice, you get cards with polyominoes – players take turns choosing one and crossing out the corresponding patterns in the different grids, with a significant chance of combos that yield more crossings on the sheet (and hence more points). I liked it well enough, and I think it’s also less fiddly than Ganz Schön Clever, which has a few iffy corner cases that require going back to the rules a bit too often, but it didn’t have enough of a wow factor for us to get a copy.

Bohemians

Bohemians is a deck building game where the currency that you have to get new cards depends on the half-symbols that you manage to combine into full symbols when you get your cards, with additional actions on the better cards (and bad things happening to you if you forget to work to support your artistic ways). Cool mechanics (I had previously enjoyed the symbol combination mechanic in After Us), pretty theme, and made by Portal Games, for which I have a personal fondness – we got a box. And with the box I also got Ignacy Trzewiczek’s third “Boardgames that tell stories” book (I had enjoyed the first two) and got it signed since he was around when I got it 🙂

Postcards

I liked the theme of Postcards – traveling through France and sending postcards – that sounded nice; and the first random postcard that I started the game with depicted Carbonnade Flamande (a personal favorite); but the mechanics of the game (filling in a few tasks, getting the opportunistic bonus and some set collection) fell a bit flat for us.

A Carnivore Did It!

A Carnivore Did It! is a cooperative deduction game where you try to solve a series of puzzles: you get cards with animals and some statements, of which you know that a given number are true (and the rest are false), and you try to deduce as a group who the culprit is. Fun times, a lot of scenarios to go through, and my love of logical puzzles made me grab a copy.

Railroad Tiles

I enjoyed Railroad Ink (a series of roll&writes where you make a rail and road network), so I was very curious about the tile version of it. Players get a set of tiles to add to their network. During the game, they also get to add trains, cars and commuters to their network and score points accordingly; a few bonus tiles and the largest rectangular area of the board round out the final score. This sort of thing tickles my brain exactly right, so we got a copy, even if Pierre was less convinced than I was (but then: it has a solo mode!)

Sanibel

Sanibel is a set collection game with a placement on a board and a seashell theme. I liked the theme and the different shapes of the tiles (diamonds and hexagons made of three diamonds), but again not enthusiastically so. And the table was a bit too small, which led to an incident of board flipping that was not very pleasant 😛 (Not the fault of the game, though 🙂 )

Wispwood

Wispwood is a tile laying game where, at each turn, players choose a colored tile from a common reserve and one of the polyominoes next to it to build an increasingly large grid that eventually gets scored along multiple axes. We didn’t get to play it, only got the explanation, but I’m curious about the feel of the game and we’ll try to find an open table in the next few days.

EDIT and we did find a table, and it was indeed delightful, and we bought a box.

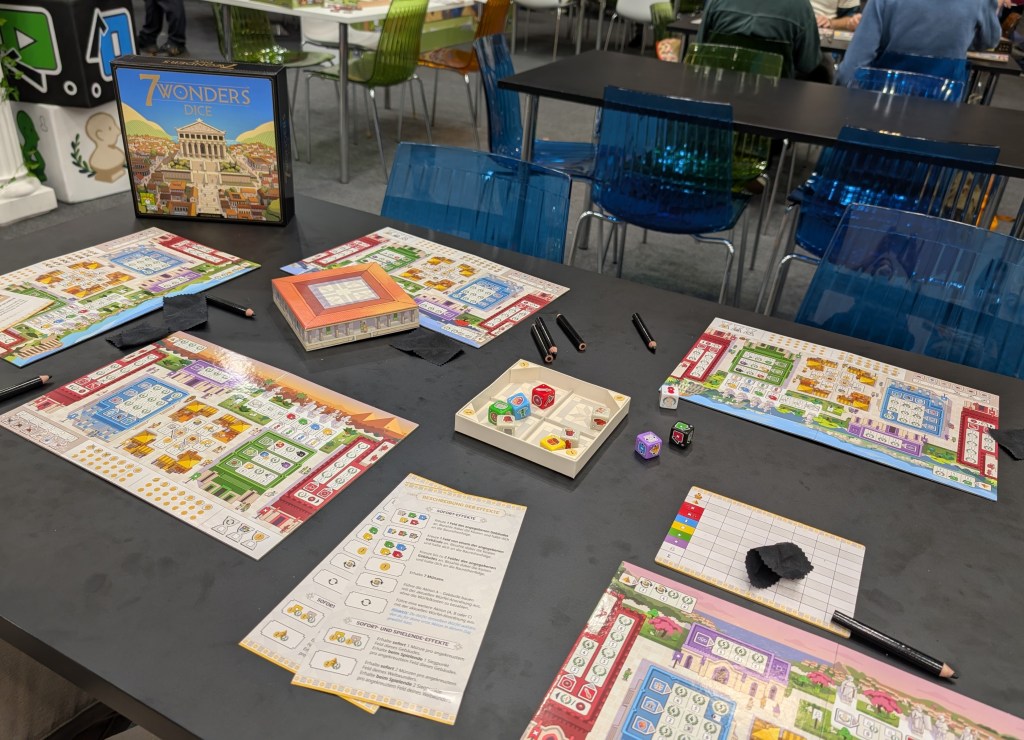

7 Wonders Dice

In the 7 Wonders Extended Universe, we now have: the dice game! This is a roll and write – at every round, a set of dice is rolled, of which each player chooses one (at the same time) and adds it to their player board for various effects. We could only play a few rounds since it was the very end of the day, but again the overall feeling wasn’t enthusiastic.

The Hanging Gardens

The Hanging Gardens is a tile laying game where you try to make gardens irrigated in the same way as your objective card while adding animals, plants, humans, and try to optimize the associated points. Pretty neat, but lacked a bit of a “wow” factor.

Castle Combo

Castle Combo is a tableau-building game where players build a 3×3 grid of characters that interact in various ways to yield points. It was released last year, but there was an expansion this year, which gave us the opportunity to play it; the publisher wasn’t selling the base box today, but it’s going on my next shopping list because I really enjoyed it – it’s fast, every decision matters, and it’s tight and well made.

City Tour

City Tour may well have been designed as “what if Tsuro, but cooperative” – all the players are driving the same bus by adding tiles at the end of its path, gathering passengers and dropping them at various points. Cute, and the box seems to have more options than the basic version we played, but not much more than that for us.

Ladybugs

Ladybugs was the unexpected territory control game of the day – you play as a colony of ladybugs that try to control fields of daisies. When putting a ladybug on the field, you get to place it at a distance, orthogonally, equal to the number of dots on one of the ladybugs you have previously placed; this yields a nice puzzle where you have to place your bugs while planning your next moves. Pretty cool, not a buy.

Restart

Restart was the most puzzling game we played on Friday. It presents itself as a cross between Rummikub and Uno; players place numbered tiles in increasing rows, with a few special tiles allowing them to manipulate the board a bit. Whether it was because we were only two players or because we weren’t playing aggressively enough, neither of us got the point of the game (which doesn’t happen often!)

INK

In INK, players try to get rid of their ink bottles by placing them on a tiled board that they build during the game – when an area is large enough, you get to place ink bottles on the few places in that area that welcome them. Larger areas yield bonuses that can create small combos. It was a very pleasant game and the ink bottles were adorable and I’m looking forward to playing it again with the box we bought.

Cosmolancer

Cosmolancer is the new edition of a 1994 game (something we learnt after playing it 🙂 ). Players take turns placing score tiles and scoring tokens on a board to maximize their own score and minimize their opponent’s. Good design, tight game, a couple of things that we didn’t necessarily fully understand/score correctly during our game. But also not something we’d expect to put on the table.

Dying Message

The accidental social game of the day. In Dying Message, one player plays the murder victim and the others the detectives. The murder victim has a set of abstract cards to communicate who killed them from beyond the grave, and the detectives must decide, given a list of suspects, who the victim may be talking about. I took the role of the murder victim, and all my detectives failed at understanding me 😦 This might be fun with the right group, but we don’t think we’re necessarily part of that type of group 🙂

TRND

Trnd is a game where you try to collect the largest possible set of a certain type of chairs while discarding the rest. And you can only discard identical cards that have a common characteristic (color or shape) with the current discard. Intriguing, but not quite enough to make us get a box.

Knitting Circle

In Knitting Circle, players create clothes from squares of wool that their cats bring them. There are two phases in each round: moving cats around a wheel to get wool pieces, and assembling said wool pieces into clothes, with various bonuses for specific colors, patterns or shapes. Cute, and the switch between the player-interaction phase to get materials and the more solitaire puzzle during assembly is pleasant. Didn’t click quite enough to get a box though.

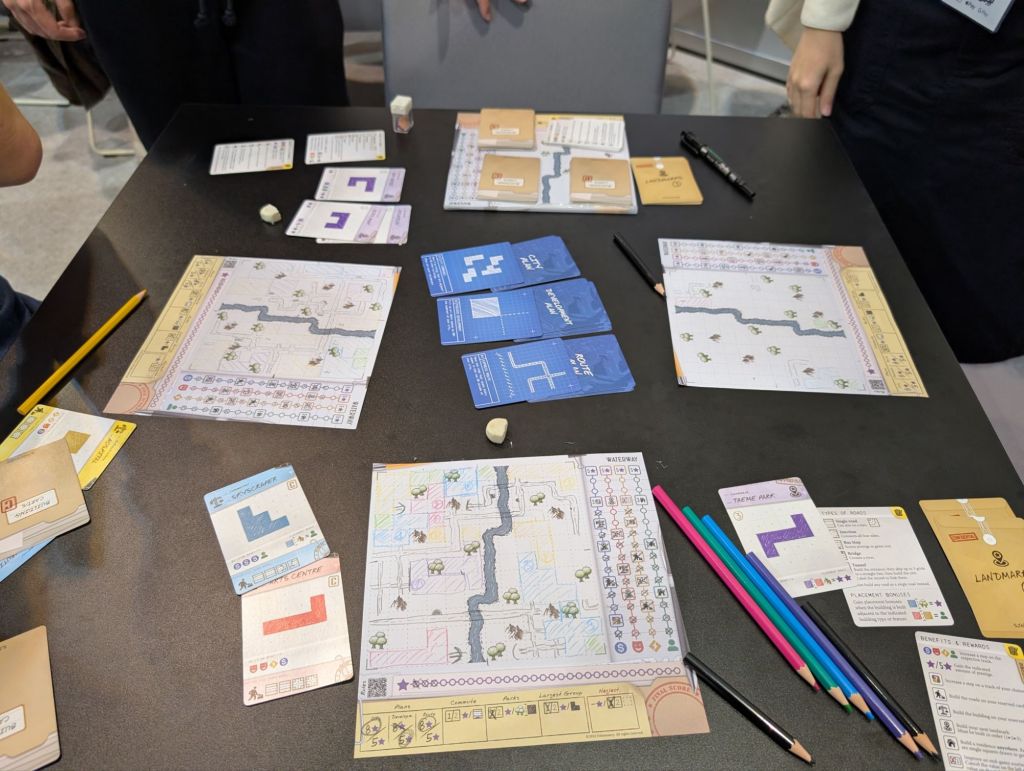

Scribble City

Scribble City is a game where you draft cards to add roads and polyomino buildings to a map. A few objectives and additional bonuses make it a bit more strategic, and overall this would have been a buy… if their shipment had arrived in time for Essen! As it is, I’ll probably try to get a copy at some point still.

R.A.V.E.L.

Ravel is a solo (or “two people working the problem together”) game where you have a set of dice, constraints on how you can move/change them, and objectives to fulfill. It’s a very nice puzzle, it has a few ways to adjust the difficulty (we were either very lucky or very good at it today), and it’s delightful. I got a box.

Hues and Cues

Hues and Cues is a party game where someone tries to give clues for the other players to guess a color. The closer people are to the color to be guessed, the more points the clue giver and the guessers get. We had a few laughs in the couple of rounds that we played – including with someone who was very colorblind but evidently surprisingly good at color games 😀 Fun to play, but not a fit for our usual games.

Just 3 Folds

Just 3 Folds is an origami game. You get a picture and a piece of colored paper, and you have to re-create the picture by folding the piece of paper only three times. The additional twist is that you’re actually creating only a quarter of the image, and check your work by putting it against an angle mirror. Cute concept, and we got a copy – it may work for us under some circumstances.

Flip 7

We hadn’t played Flip 7 yet, which is now fixed! It’s a push-your-luck game, described as “a mix of blackjack and Uno”. You can continue getting cards as long as you wish, but if you get two identical ones, you lose (and the winner is the one with the largest score). Pretty fun and the Deluxe edition (which we played) is gorgeous, but the game itself doesn’t necessarily warrant the price point of said deluxe edition. That said, we might get a deck of the regular edition at some point – looks like an easy filler game.

The Last Droids

In The Last Droids, you get cards that you can either buy for their effects or recycle to get resources to buy said cards. It’s a post-apocalyptic theme where you try to rebuild houses, and the cards are defunct robots (that you can either repair for actions or recycle). The originality comes from the four-player game, in which players form two teams and draft cards, keeping one and giving the other, depending on the turn, to their adversary or to their partner. I really really enjoyed it and I got a box. I’m curious to see how it plays with other numbers of players too!

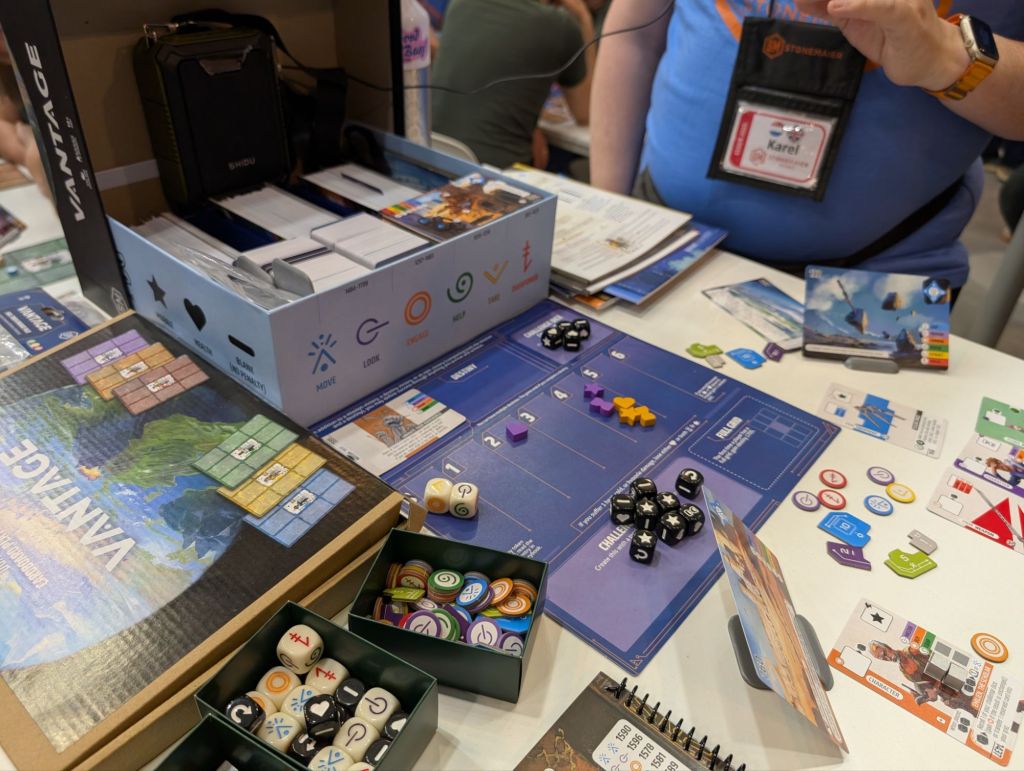

Vantage

We only got a table explanation of this game and we have very little chance of getting a table, but it looks quite fascinating. It’s an open world exploration game where players are (in game) physically separated but can communicate to achieve a common mission; there’s a metric ton of cards and it’s NOT a campaign game, just a game with a lot of randomization of the starting conditions. I’m VERY curious and I’d love to give it a try (but it’s also sold out, so even the leap of faith is not an option).

Propolis

Propolis is a game with bees (and you get a bunch of beeples) where you collect resources to build cards that give you discounts for future buys. It is very reminiscent of Splendor, with a few more strategic elements, and it’s really nice, but not distinct enough niche-wise from Splendor to my taste to warrant a buy.

Carnival of Sins

Carnival of Sins looks pretty neat, concept-wise – each player has a hand of 7 cards that they will play entirely to get dice that are rolled at the beginning of the round, with various card effects (get the highest die, get an odd and an even die, that sort of thing), with a few backstabby effects too. The cards are also very pretty. But we did run into at least 3 corner cases during our game that were not mentioned in the rulebook, which made it feel a bit incomplete, rule-wise.

Into the Machine

Into the Machine is a very efficient combination of racing game (you want to move your markers as fast as possible to the end) and worker placement (by making actions on a board where space is limited). Very very enjoyable, good iconography – it was a prototype so no copies to buy but it may be in next year’s list.

Skybridge

Table demo only for Skybridge, a game where players try to build a bridge in the sky with the help of various characters and gods. It did look interesting, but not enough to try to fight for a table on our last, shorter day.

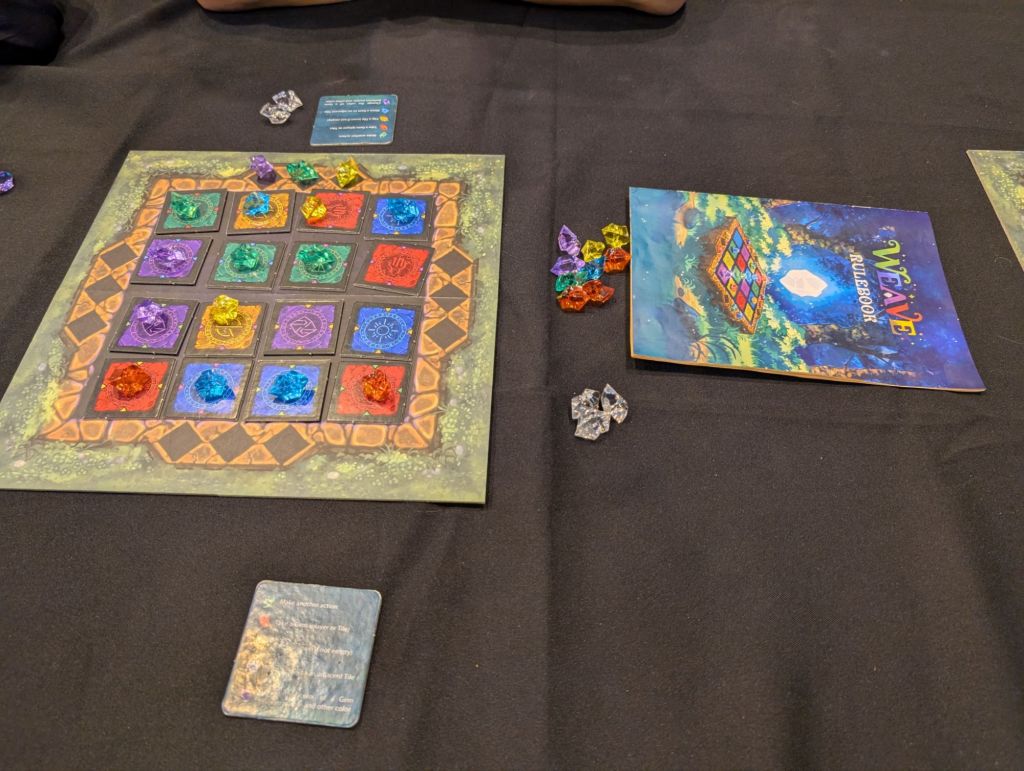

Weave

We played Weave with two players, and we suspect that’s the best player count for it – it was certainly very good with two. You’re both playing on the same grid, trying to make alignments of gems on the correct color of tile with three actions: pick a gem, place a gem, flip a tile (to get another, known color). If you place a gem on a tile of the same color, you get an extra action depending on said color, leading to fun combos. First player to three points (you score a point when an action creates a line) wins the game. Very pleasant, and we got a copy (and I’ll update the BoardGameGeek link when it’s out of the processing queue 🙂 ).

Five Families

In Five Families, players play mafia families trying to control boroughs of New York in the 1930s. They try to claim territory and, if they manage to transform their claim, control it, usually at the cost of people (canonically “injured”) and/or money. Areas are grouped into sets of three, on which majority control is computed for extra points. I liked it a lot for the first half of the game, and then it felt like it was getting quite long. I suspect that we were not playing the game aggressively enough for it to quite work.

Kingdom Crossing

Kingdom Crossing was high on my list of games I wanted to look at this year, and it almost didn’t work out (but we found a table as the last thing we played this year!). There’s no way I’m going to resist “we took the 7 bridges of Königsberg and made a board game with that”. Add to that cute animals, a solid engine building mechanism and action selection, and you get a box I’m happy to have in my collection 🙂